BIG IDEAS

Big Chemistry Consortium

The transition to a circular and sustainable economy requires a transformation of chemistry. Radical new approaches are necessary to deliver this transformation in a short time span. In the past decade, automation and artificial intelligence have transformed many industries. However, the introduction of artificial intelligence in the chemical sciences lags behind.

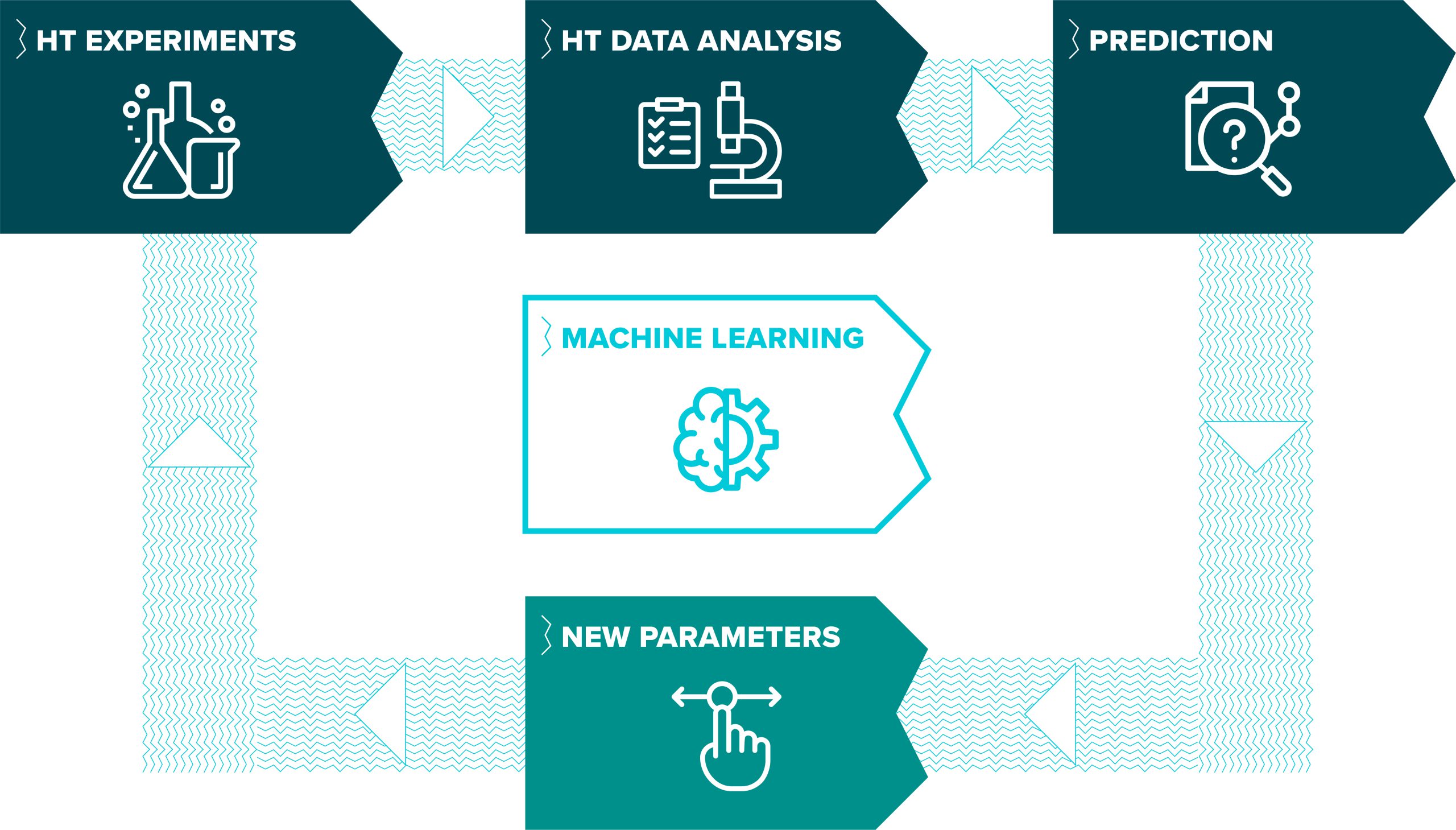

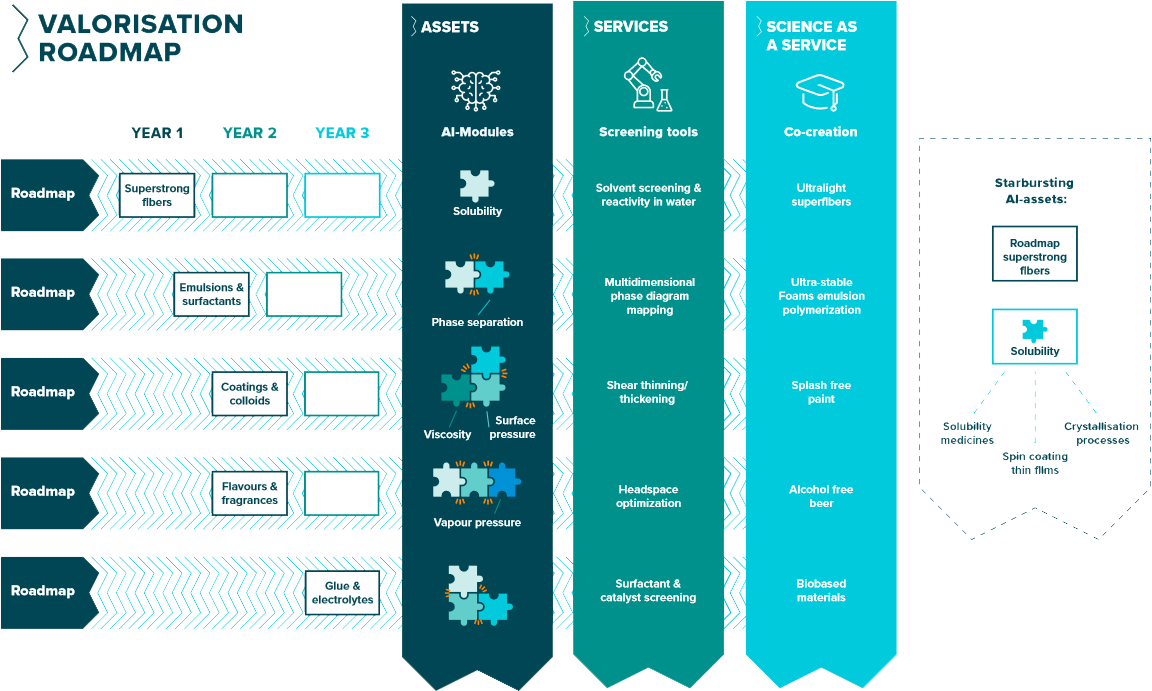

The Big Chemistry consortium will develop a ‘big data’ approach to chemical research. We will build a fully functional ‘RobotLab’, greatly increasing the number of experiments that can be carried out and analysed using novel Bayesian optimisation methods. Building on high-quality data from the RobotLab, we will train new AI algorithms to predict the physical properties of molecular systems, such as solubility, phase separation, critical micelle concentration or reaction rates, and to predict the emergent properties of complex molecular systems. Together with industrial partners, we will exploit the RobotLab to transform the formulation of complex mixtures from an art into a science-based technology.

The RobotLab

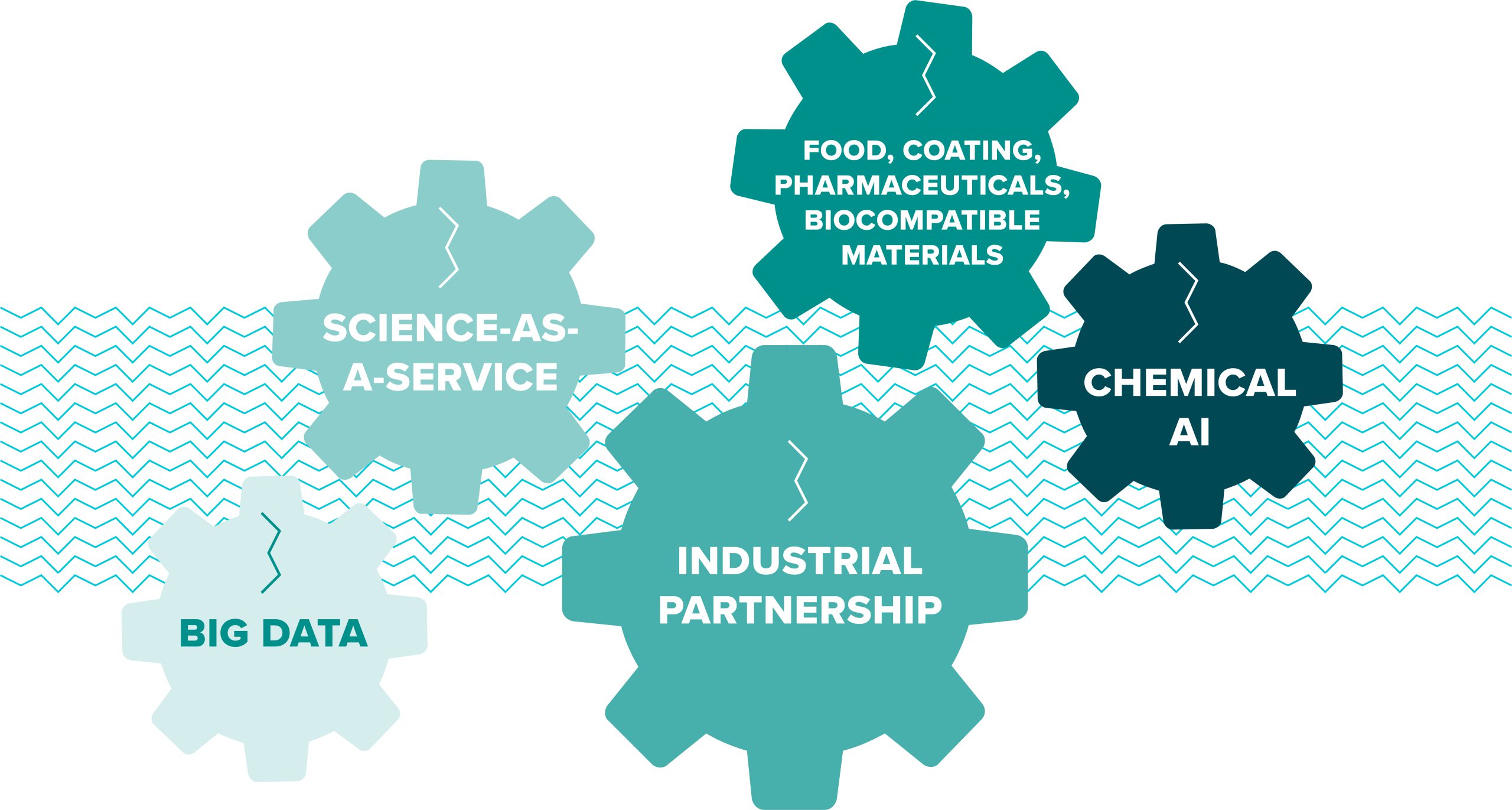

The RobotLab will be an (inter)national facility for accelerated discovery of solutions for complex molecular systems. In the initial phase (2023-2027) an academic consortium will develop chemical AI and build large data sets on physical properties of molecular systems by integrating robotics and analytics. The consortium will develop industrial partnerships for accelerating research on complex formulation problems in food and beverages, paints and coatings, as well as pharmaceuticals, agrochemicals and biocompatible materials. In the second phase (2027 onwards) we will develop the RobotLab as a commercial international entity. We strive to build an ecosystem of excellence in chemical AI and envision a wide range of collaborations with private partners, which can include open innovation projects, ‘science-as-a-service’, contract research, or the creation of start-ups. In addition to its links with international companies, the RobotLab has a strong connection with the Max Planck Gesellschaft and the Helmholtz Gemeinschaft to create a European impact.

Consortium

Leading academic partners & lead principal investigators are:

- Radboud University: Dr. Evan Spruijt

- Eindhoven University of Technology: Dr. Ghislaine Vantomme

- University of Groningen: Prof. Nathalie Katsonis

- Physics institute AMOLF: Prof. Wim Noorduin

- Fontys University of Applied Sciences: Dr. Teade Punter

- Advisor: Prof. Wilhelm Huck

Scheme 1. Illustration of a ‘valorisation roadmap’, showing how small, fundamental science projects provide the ‘puzzle pieces’ for larger, integrated projects with ever more specialized applications.

Collaboration & IP

For collaborative projects with industry, project-specific arrangements will be laid down in separate collaboration agreements (using existing models from NWO, among others). The Big Chemistry consortium will work with an ‘open innovation’ model, in which participating companies within the RobotLab will be able to work in a ‘closed area’ where other parties do not have access to sensitive data. Data from industrial partners will be “anonymized” and properly shielded from other users. The premise is that the datasets that drive the RobotLab and enable chemical AI give the RobotLab a unique competitive position and will therefore play a central role in its future commercial development. A single overarching data strategy is being pursued, based on the general notion that data is a key ‘asset’ of automated labs.

Focus areas: Revolutionizing Chemical Technology via Molecular-Level Understanding of Chemical Mixtures

Modern chemical technology makes extensive use of formulated products, ranging from drug delivery systems and agrochemicals to flavourings, fragrances, surfactants and resins. These products are traditionally created and optimised by trial and error, an inefficient and costly process. Understanding how to engineer formulations for specialised use cases based on fundamental molecular knowledge would thus provide avenues to consolidate routes from requirements to products, as well as a set of powerful tools for the design of novel systems. A significant barrier to such design at the compositional level is understanding how to account for the ensembles of chemical interactions that contribute to desirable complex properties. Furthermore, under the non-ideal conditions present in formulations, the properties of compounds measured in their pure form cannot be assumed to carry over.

Whilst the underlying molecular-level physical chemistry of solubility, vapour pressure, micelle formation and phase separation is well understood, it is not yet possible to make accurate quantitative predictions of these properties, either for pure substances or for mixtures. Thus, the pathway to implementing automated synthesis routes or introducing novel, advanced compounds into new chemical technologies is long and inefficient. If it were possible to predict the kinetics of chemical reactions, or how a given compound will contribute to the properties of a formulation, in advance of sample preparation, the design-experiment-evaluate cycle could be significantly consolidated.

The Big Chemistry consortium will combine high-throughput, multivariate data collection with machine learning (ML) and artificial intelligence (AI) methods to tackle the challenge of establishing concepts and principles for the molecular-level design of complex mixtures. Early milestones in this work will be the development of ML models capable of predicting key properties such as solubility, surface tension and reaction kinetics.
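
As a purely illustrative sketch of what a design-experiment-evaluate cycle driven by Bayesian optimisation might look like, the Python example below fits a small Gaussian-process surrogate to the results measured so far and uses it to choose the next mixture composition to test. It is not the consortium's method. Everything in it is an assumption made for illustration: the function names, the single composition variable, the kernel length scale, the upper-confidence-bound selection rule, and in particular `run_experiment`, which is a hypothetical synthetic stand-in for a robotic measurement and contains no real data.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.15):
    """Squared-exponential kernel between two 1-D arrays of compositions."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP surrogate."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_qt = rbf_kernel(x_query, x_train)
    mean = k_qt @ np.linalg.solve(k_tt, y_train)
    solved = np.linalg.solve(k_tt, k_qt.T)
    # Prior variance is 1 for this kernel; subtract the explained part.
    var = 1.0 - np.sum(k_qt * solved.T, axis=1)
    return mean, np.sqrt(np.clip(var, 0.0, None))


def run_experiment(fraction):
    """Hypothetical stand-in for a robotic measurement (synthetic response)."""
    return float(np.exp(-((fraction - 0.62) ** 2) / 0.02))


def optimise(n_rounds=12, kappa=2.0):
    """Pick compositions one at a time using an upper-confidence-bound rule."""
    candidates = np.linspace(0.0, 1.0, 101)  # candidate mixing fractions
    tried_idx = [0, 100]                     # start with the two pure ends
    results = [run_experiment(candidates[i]) for i in tried_idx]

    for _ in range(n_rounds):
        x = candidates[tried_idx]
        y = np.array(results)
        # Centre the data so the zero-mean GP assumption is reasonable.
        mean, std = gp_posterior(x, y - y.mean(), candidates)
        score = mean + kappa * std           # favour promising or unexplored points
        score[tried_idx] = -np.inf           # never repeat an experiment
        nxt = int(np.argmax(score))
        tried_idx.append(nxt)
        results.append(run_experiment(candidates[nxt]))

    best = int(np.argmax(results))
    return float(candidates[tried_idx[best]]), results[best]


if __name__ == "__main__":
    best_fraction, best_value = optimise()
    print(f"best fraction tried: {best_fraction:.2f}, response: {best_value:.3f}")
```

In this sketch the surrogate model decides where the next experiment is most informative, so a small number of measurements can locate a good composition without scanning the whole range. A real workflow would involve many composition variables, measurement noise and models trained on the RobotLab's own data.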